Energy efficiency in AI training is a critical area of focus due to the high energy consumption associated with training deep learning models. Here are some key strategies and developments aimed at improving energy efficiency in AI training:

Techniques such as model pruning, quantization, and knowledge distillation reduce model complexity, lowering energy consumption during training and inference.
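As a rough illustration of two of these techniques, the sketch below applies magnitude pruning and uniform 8-bit quantization to a plain list of weights. The helper names `magnitude_prune`, `quantize_uniform`, and `dequantize`, as well as the sample weights, are illustrative assumptions and do not come from any particular library; production frameworks ship their own, more sophisticated tooling.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude weights (hypothetical helper).

    sparsity is the fraction of weights to set to zero, between 0 and 1.
    """
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


def quantize_uniform(weights, bits=8):
    """Map floats to signed integer codes with one shared scale (hypothetical helper)."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]


weights = [0.42, -0.03, 0.90, 0.01, -0.57, 0.08]  # made-up example values
pruned = magnitude_prune(weights, sparsity=0.5)   # half the weights become zero
codes, scale = quantize_uniform(pruned, bits=8)   # small integers plus one float scale
restored = dequantize(codes, scale)               # approximation of the pruned weights
```

Pruning removes the weights that contribute least, and quantization stores the rest in fewer bits; both trade a small amount of precision for less computation and memory traffic. How much energy that saves in practice depends on the hardware and the model.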

Efficient network architectures are also being explored for their potential to reduce computational demands.

Using GPUs and TPUs designed for AI workloads can reduce energy use compared with general-purpose CPUs.

Adjusting hardware power consumption based on workload requirements can significantly reduce energy waste.

Ensuring high-quality data reduces unnecessary training cycles and model complexity, thereby lowering energy consumption.

Data-efficient learning methods minimize the need for large datasets, reducing data acquisition and storage costs.

Implementing algorithms that dynamically adjust training processes based on energy efficiency metrics can optimize resource allocation and reduce energy consumption.
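One simple way to picture such an energy-aware adjustment is to profile a few candidate training configurations and keep the one that processes the most samples per joule. The sketch below assumes throughput and average power have already been measured; the function names, configuration names, and numbers are all hypothetical.

```python
def samples_per_joule(throughput_samples_per_s, power_watts):
    """Energy efficiency metric: samples processed per joule consumed."""
    return throughput_samples_per_s / power_watts


def pick_most_efficient(measurements):
    """Choose the configuration name with the highest samples per joule.

    measurements maps a configuration name to a
    (throughput in samples/s, average power in watts) pair.
    """
    return max(measurements, key=lambda name: samples_per_joule(*measurements[name]))


# Made-up profiling results for three hypothetical batch sizes.
measurements = {
    "batch_32": (400.0, 200.0),   # 2.0 samples per joule
    "batch_64": (700.0, 250.0),   # 2.8 samples per joule
    "batch_128": (800.0, 320.0),  # 2.5 samples per joule
}
best = pick_most_efficient(measurements)
```

In this made-up profile the largest batch size has the highest raw throughput but not the best efficiency, which is why the metric, rather than speed alone, drives the choice.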

Prioritizing renewable energy sources for data centers and promoting the reuse of existing models instead of retraining from scratch are key sustainability strategies.

Researchers have developed a method to predict computational and energy costs for updating AI models, enabling more sustainable planning and decision-making.
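The researchers' method is not described here, so the following is only a back-of-the-envelope sketch of the kind of estimate such planning might start from: energy as device count times average power times run time, scaled by a data center overhead factor. The function name and every number are assumptions chosen for illustration.

```python
def estimate_training_energy_kwh(num_devices, avg_power_watts, hours, pue=1.0):
    """Back-of-the-envelope energy estimate in kilowatt-hours.

    pue is the data center's power usage effectiveness (total facility
    energy divided by IT equipment energy); 1.0 means no overhead.
    """
    it_energy_kwh = num_devices * avg_power_watts * hours / 1000.0
    return it_energy_kwh * pue


# Hypothetical comparison: retraining from scratch vs. a shorter update run.
full_retrain = estimate_training_energy_kwh(8, 300, 100, pue=1.5)  # 360 kWh
incremental = estimate_training_energy_kwh(8, 300, 10, pue=1.5)    # 36 kWh
savings_fraction = 1 - incremental / full_retrain
```

Even a crude estimate like this makes the trade-off between a full retrain and a shorter update explicit before any hardware is switched on.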

Studies have shown that early stopping during model training can reduce energy consumption by up to 80%, highlighting the potential for significant energy savings.
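Early stopping is commonly implemented as a patience rule on the validation loss. The minimal sketch below uses a plain list of losses in place of a real training loop; the function name, the `patience` and `min_delta` parameters, and the loss values are illustrative assumptions rather than the setup used in the cited studies.

```python
def train_with_early_stopping(val_losses, patience=3, min_delta=0.0):
    """Return (epochs_run, best_loss) for a stream of validation losses.

    val_losses stands in for a real training loop: each item is the
    validation loss measured after one epoch (hypothetical input).
    """
    best_loss = float("inf")
    epochs_without_improvement = 0
    epochs_run = 0
    for loss in val_losses:
        epochs_run += 1
        if loss < best_loss - min_delta:
            best_loss = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # stop: validation loss has plateaued
    return epochs_run, best_loss


# Made-up validation losses for a run scheduled for 10 epochs.
losses = [0.90, 0.70, 0.60, 0.61, 0.60, 0.62, 0.61, 0.60, 0.62, 0.61]
epochs_run, best_loss = train_with_early_stopping(losses, patience=3)
```

With these made-up losses the run stops after 6 of the 10 scheduled epochs. The savings in a real run depend on the model, the data, and how the patience is tuned.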

As traditional computing faces physical limits, innovations like quantum computing and novel chip architectures may offer future efficiency gains.

-

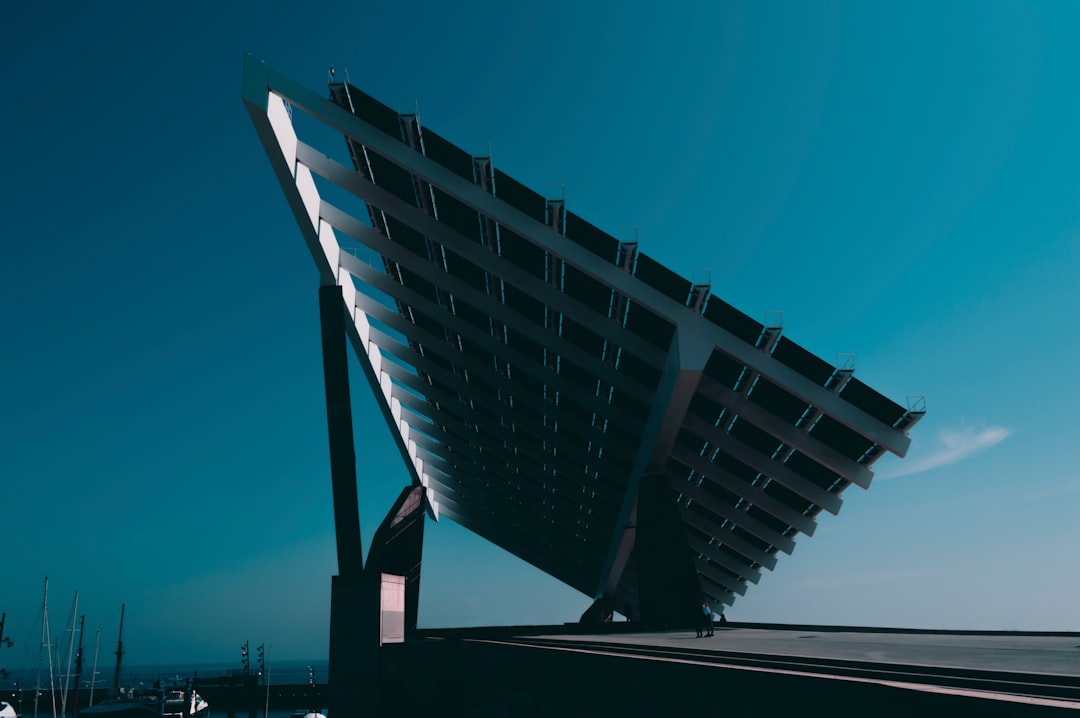

: Prioritizing renewable energy and green data centers is crucial for reducing AI's environmental footprint.

